Checking for event time indexing

This task involves assessing how event timestamps are resolved as events are indexed into the Splunk platform that Splunk ITSI (ITSI) runs on. Source types that index events at future or past dates beyond acceptable tolerances are identified for remediation. This procedure is valid as of ITSI version 4.15.

This article is part of the Splunk ITSI Owner's Manual, which describes the recommended ongoing maintenance tasks that the owner of an ITSI implementation should make sure are performed to keep the implementation functional. To see more maintenance tasks, see the complete manual.

Why is this important?

Maintaining the health of the underlying Splunk search capability is paramount for the effective functioning of ITSI. Since ITSI heavily relies on the underlying Splunk core implementation for data processing, correlation, and predictive analysis, any issues with the quality of the underlying data in the Splunk platform can negatively impact the accuracy of the ITSI implementation.

Data ingest can sometimes be misconfigured, causing the Splunk platform to assign the wrong timestamp to an event when it is indexed. Because ITSI retrieves data for KPI calculations using specific time ranges, an event indexed with an incorrect timestamp can fall outside the range a KPI search covers and be missed. The KPI then reports a false reading because its underlying search does not see all the events being produced.
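To make this failure mode concrete, here is a minimal Python sketch of a time-bounded search. It is a simplified model, not how ITSI implements KPI searches: the `Event` class, the `events_in_window` helper, the source type names, and the five-minute window are all hypothetical. It shows that a search bounded on the parsed event time (`_time`) skips an event whose timestamp was parsed three hours into the future, even though that event was indexed inside the window.

```python
from dataclasses import dataclass


@dataclass
class Event:
    sourcetype: str
    time: float       # parsed event timestamp (_time), epoch seconds
    indextime: float  # when the event was written to the index (_indextime), epoch seconds


def events_in_window(events, earliest, latest):
    """Return events whose parsed timestamp falls in [earliest, latest).

    The window is applied to the parsed timestamp, not the index time, so an
    event with a mis-parsed timestamp is skipped even if it was indexed
    inside the window.
    """
    return [e for e in events if earliest <= e.time < latest]


if __name__ == "__main__":
    now = 1_700_000_000.0
    window = 300.0  # hypothetical 5-minute KPI calculation window
    events = [
        Event("good:st", now - 60, now - 55),
        Event("bad:st", now + 3 * 3600, now - 50),  # timestamp parsed 3 hours ahead
    ]
    matched = events_in_window(events, now - window, now)
    print([e.sourcetype for e in matched])  # ['good:st']
```

Both events were indexed within the last minute, but only the correctly timestamped one is counted.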

Accurate timestamping is critical for ITSI to function correctly and provide meaningful and accurate insights.

Schedule

Every month

Prerequisites

Appropriate search access to the underlying Splunk environment is required to perform this activity.

Notes and warnings

Some data sources might exhibit a degree of timestamp drift by design. Be cautious, and always confirm whether an inaccuracy identified in this procedure is by design instead of making assumptions. This is especially true for source types that show only a small divergence.

How to use Splunk software for this use case

If you prefer to follow along with this procedure in video format, see the Video walk-through section at the bottom of this page.

Step 1: Check for events indexing in the future

- In the top left corner of the screen, click the Splunk logo.

- In the menu on the left-hand side of the screen, select IT Service Intelligence.

- In the menu at the top of the page, click Search.

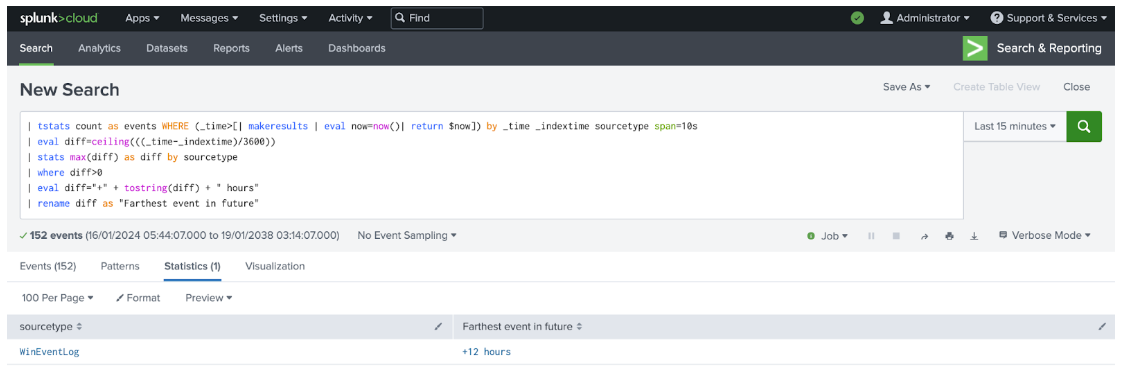

- Copy the following search into the search bar and press Enter to run the search.

| tstats count AS events WHERE (_time>[| makeresults | eval now=now()| return $now]) BY _time _indextime sourcetype span=10s | eval diff=ceiling(((_time-_indextime)/3600)) | stats max(diff) AS diff BY sourcetype | where diff>0 | eval diff="+" + tostring(diff) + " hours" | rename diff AS "Farthest event in future"

- Look at the search results below the search bar, and record all source types listed in the sourcetype column. These represent every source type that receives events whose timestamps resolve to a future date.

- The recorded source types should have their ingest time configuration modified to resolve the future timestamp issues. Collaborate with your Splunk platform administrator to facilitate this resolution. If you require assistance with this activity, contact your Splunk account team to engage professional services support. You can also find information on configuring timestamp recognition for event ingestion in Splunk Help.
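The arithmetic in the Step 1 search can be hard to follow in a single line of SPL. The following Python sketch mirrors it under simplifying assumptions: each input row is a hypothetical `(sourcetype, _time, _indextime)` tuple in epoch seconds, the `span=10s` bucketing is ignored, and `farthest_future_offsets` is an illustrative name, not a Splunk API. As in the search, the offset is rounded up to whole hours, so an event only 35 seconds ahead still reports as `+1 hours`.

```python
import math

HOUR = 3600  # seconds


def farthest_future_offsets(rows, now):
    """Approximate the Step 1 search in plain Python.

    rows: iterable of (sourcetype, _time, _indextime) tuples in epoch seconds
    (a hypothetical input shape). The span=10s bucketing of _time is ignored.
    """
    max_diff = {}
    for sourcetype, time, indextime in rows:
        if time <= now:  # WHERE _time > now()
            continue
        diff = math.ceil((time - indextime) / HOUR)  # ceiling(((_time-_indextime)/3600))
        # stats max(diff) AS diff BY sourcetype
        max_diff[sourcetype] = max(diff, max_diff.get(sourcetype, diff))
    # where diff>0, then format the value as "+N hours"
    return {st: f"+{d} hours" for st, d in max_diff.items() if d > 0}


if __name__ == "__main__":
    now = 1_700_000_000
    rows = [
        ("app:log", now + 7000, now - 100),   # about 2 hours ahead
        ("app:log", now + 30, now - 5),       # 35 seconds ahead, still rounds up to 1 hour
        ("web:access", now - 100, now - 90),  # normal event, excluded by the time filter
    ]
    print(farthest_future_offsets(rows, now))  # {'app:log': '+2 hours'}
```

Because the result is rounded up, even a few seconds of future drift makes a source type appear in the list, which is one reason to confirm small divergences before changing anything.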

Step 2: Check for events indexing in the past

- If you are still on the results page from the previous activity, proceed to the next step. Otherwise, repeat the navigation actions at the start of Step 1 to open the Search page, then proceed to the next step.

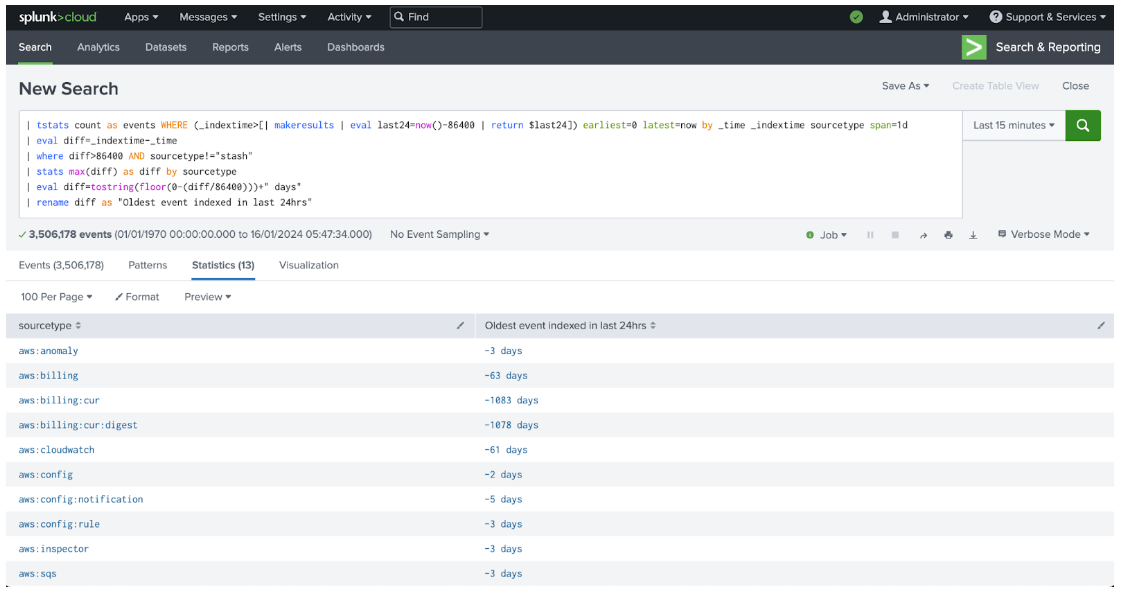

- Copy the following search into the search bar and press Enter to run the search.

| tstats count AS events WHERE (_indextime>[| makeresults | eval last24=now()-86400 | return $last24]) earliest=0 latest=now BY _time _indextime sourcetype span=1d | eval diff=_indextime-_time | where diff>86400 AND sourcetype!="stash" | stats max(diff) AS diff BY sourcetype | eval diff=tostring(floor(0-(diff/86400)))+" days" | rename diff AS "Oldest event indexed in last 24hrs"

- Look at the search results below the search bar, and record all source types listed in the sourcetype column. These represent every source type that, in the last 24 hours, indexed events with timestamps more than 24 hours in the past.

- The recorded source types should have their ingest time configuration modified to resolve the past timestamp issues. Collaborate with your Splunk platform administrator to facilitate this resolution. If you require assistance with this activity, contact your Splunk account team to engage professional services support. You can also find information on configuring timestamp recognition for event ingestion in Splunk Help.
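The Step 2 search can be modeled the same way. This Python sketch is again an approximation: the `(sourcetype, _time, _indextime)` row shape and the `oldest_past_offsets` name are hypothetical, and the `span=1d` bucketing is ignored. In the real search that bucketing rounds `_time` down to the start of its day, so the search can likely report an offset up to about a day older than this sketch does. Note also that `floor` is applied to a negative value, so it rounds away from zero: an event 3.5 days old reports as `-4 days`.

```python
import math

DAY = 86400  # seconds


def oldest_past_offsets(rows, now):
    """Approximate the Step 2 search in plain Python.

    rows: iterable of (sourcetype, _time, _indextime) tuples in epoch seconds
    (a hypothetical input shape). The span=1d bucketing of _time is ignored.
    """
    max_diff = {}
    for sourcetype, time, indextime in rows:
        if indextime <= now - DAY:  # WHERE _indextime > now()-86400
            continue
        if time >= now:  # latest=now
            continue
        diff = indextime - time  # eval diff=_indextime-_time
        if diff <= DAY or sourcetype == "stash":  # where diff>86400 AND sourcetype!="stash"
            continue
        max_diff[sourcetype] = max(diff, max_diff.get(sourcetype, diff))
    # floor() of a negative value rounds away from zero, e.g. -3.49 -> -4
    return {st: f"{math.floor(-d / DAY)} days" for st, d in max_diff.items()}


if __name__ == "__main__":
    now = 1_700_000_000
    rows = [
        ("legacy:batch", now - 302_400, now - 600),    # indexed recently, stamped 3.5 days back
        ("stash", now - 5 * DAY, now - 60),            # summary data, excluded by name
        ("app:log", now - 120, now - 60),              # normal lag, under 24 hours
        ("old:index", now - 10 * DAY, now - 2 * DAY),  # not indexed in the last 24 hours
    ]
    print(oldest_past_offsets(rows, now))  # {'legacy:batch': '-4 days'}
```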

Video walk-through

In the following video, you can watch a walk-through of the procedure described above.

Additional resources

These resources might help you understand and implement this guidance:

- Splunk Help: Configure timestamp recognition