Configuring ITSI correlation searches for monitoring episodes

Now that Splunk ITSI is creating episodes from your Splunk Observability Cloud alerts, you can create two important episode monitoring correlation searches:

- The first controls when a Splunk On-Call incident should be created.

- The second determines whether the episode’s severity should be raised or lowered based on notable events added to the episode, and whether the episode should be closed because it meets self-healing criteria.

Before you follow these steps, make sure you have done the following:

- Integrated Observability Cloud alerts with Splunk Cloud Platform or Splunk Enterprise

- Normalized Observability Cloud alerts into the ITSI Universal Alerting schema

- Configured Universal Correlation Searches to create notable events

- Configured the ITSI Notable Event Aggregation Policy (NEAP)

Solution

The diagram below shows the overarching architecture for the integration that's described in Managing the lifecycle of an alert: from detection to remediation. The scope for this article is indicated by the pink box in the diagram.

In this article, you'll learn how to use the Content Pack for ITSI Monitoring and Alerting to create episode monitoring correlation searches. The Content Pack provides many examples of these searches, but this article explores two critical ones to get you started.

The two episode monitoring correlation searches evaluate all open episodes and create new notable events when a new Splunk On-Call incident needs to be created or when an episode state change occurs. These new notable events become part of the associated episode.

Next, the Splunk ITSI rules engine, which runs the notable event aggregation policy, applies action rules to the newly created notable events. If a notable event's data matches an action rule's activation criteria, the action defined in that rule (such as creating a Splunk On-Call incident) is performed.
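The following Python sketch models the rule evaluation described above: each action rule pairs activation criteria with an action, and only the rules whose criteria match a notable event fire. It is an illustration under stated assumptions, not ITSI's implementation. The field names (`episode_id`, `monitor_type`, `status`) and the action functions are hypothetical stand-ins for whatever your NEAP action rules actually check and do.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical notable event: a flat mapping of field name -> value.
NotableEvent = dict[str, str]


@dataclass
class ActionRule:
    """Activation criteria paired with the action they trigger (illustrative only)."""
    name: str
    criteria: dict[str, str]  # every listed field must match exactly
    action: Callable[[NotableEvent], str]

    def matches(self, event: NotableEvent) -> bool:
        # The rule activates only if every criterion field is present with the expected value.
        return all(event.get(field) == value for field, value in self.criteria.items())


def apply_action_rules(event: NotableEvent, rules: list[ActionRule]) -> list[str]:
    """Run every rule whose activation criteria match the event and collect the results."""
    return [rule.action(event) for rule in rules if rule.matches(event)]


# Hypothetical actions standing in for real ITSI episode actions.
def create_oncall_incident(event: NotableEvent) -> str:
    return f"create On-Call incident for episode {event['episode_id']}"


def close_episode(event: NotableEvent) -> str:
    return f"close episode {event['episode_id']}"


rules = [
    ActionRule("open incident", {"monitor_type": "oncall_trigger"}, create_oncall_incident),
    ActionRule("self-heal", {"status": "5"}, close_episode),
]

event = {"episode_id": "ep-42", "monitor_type": "oncall_trigger", "status": "1"}
print(apply_action_rules(event, rules))
```

Because a rule fires only when all of its criteria match, a notable event that matches no rule simply produces no action.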

Procedure

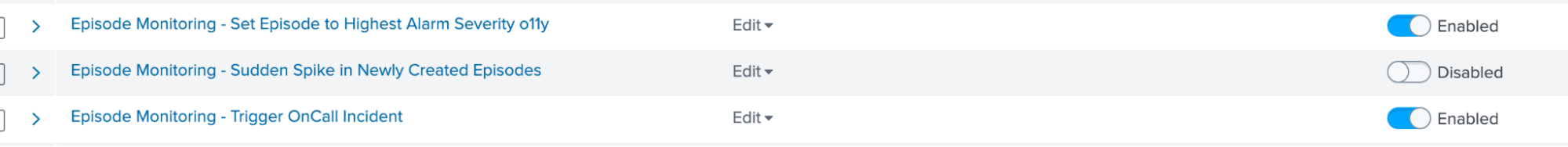

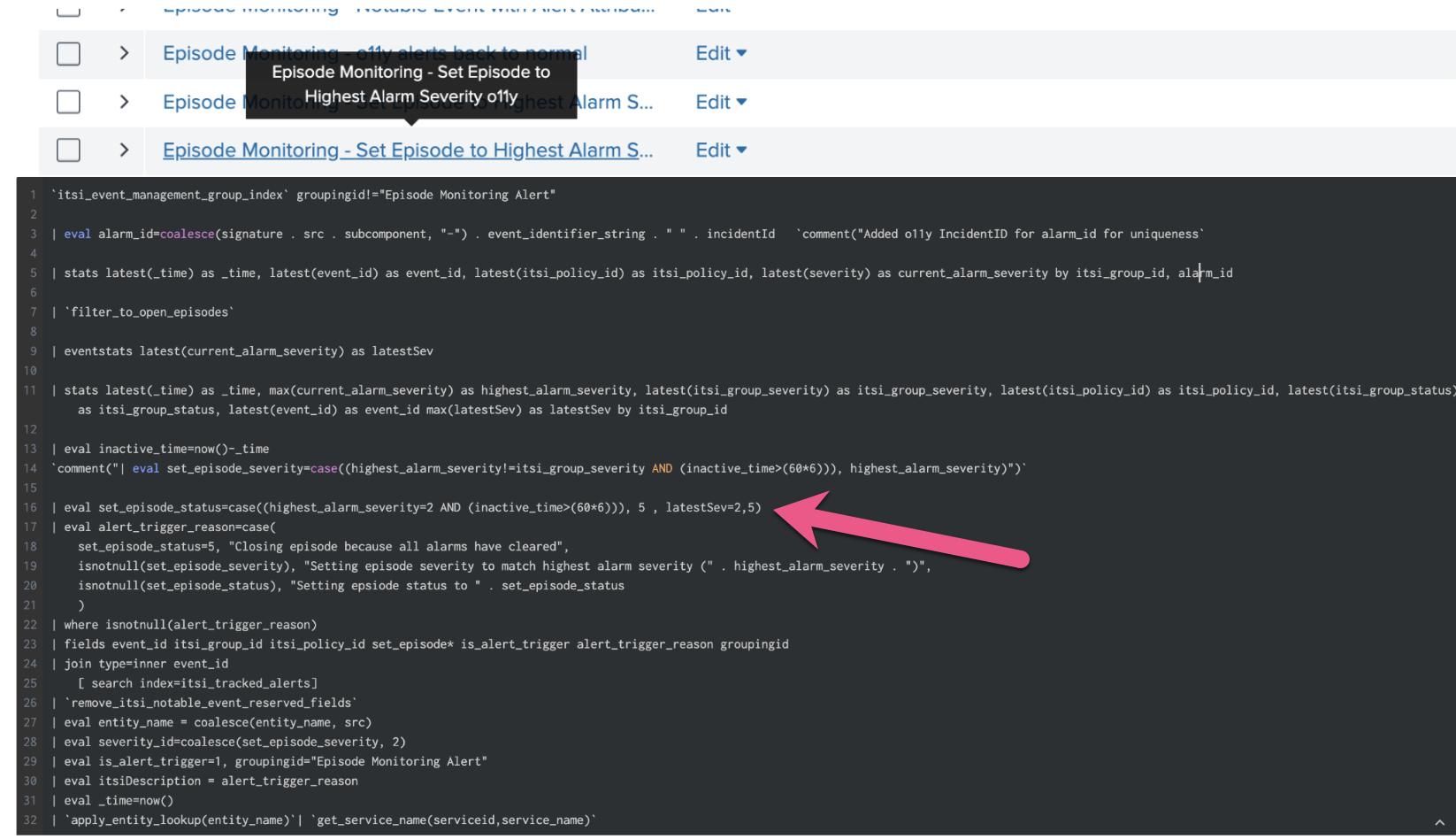

- Download this ITSI Backup file and use the ITSI Backup/Restore utility to restore and enable the two correlation searches in your Splunk ITSI instance: "Episode Monitoring - Set Episode to Highest Alarm Severity o11y" and "Episode Monitoring - Trigger OnCall Incident".

- On the Correlation Searches page, find these two searches.

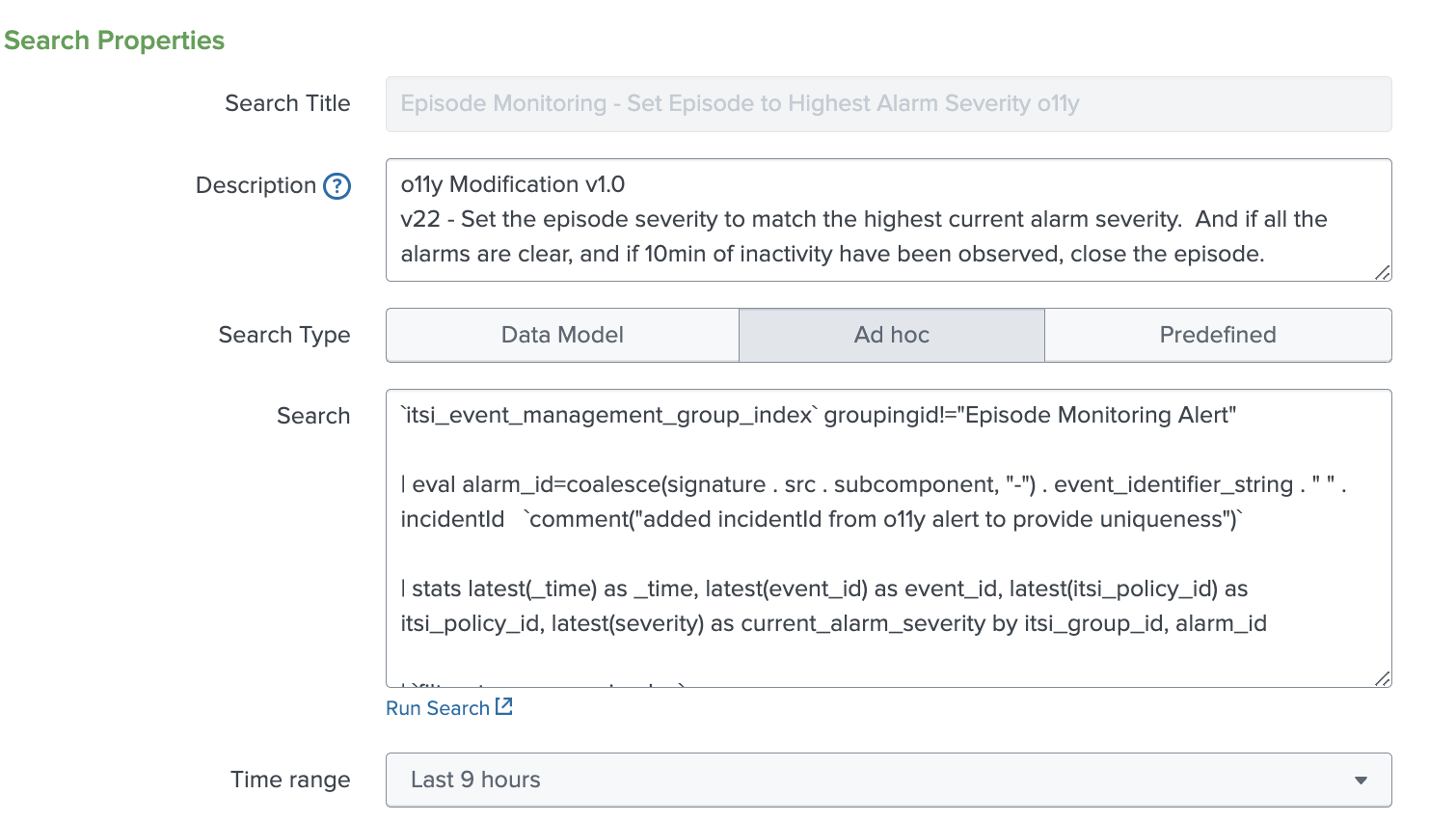

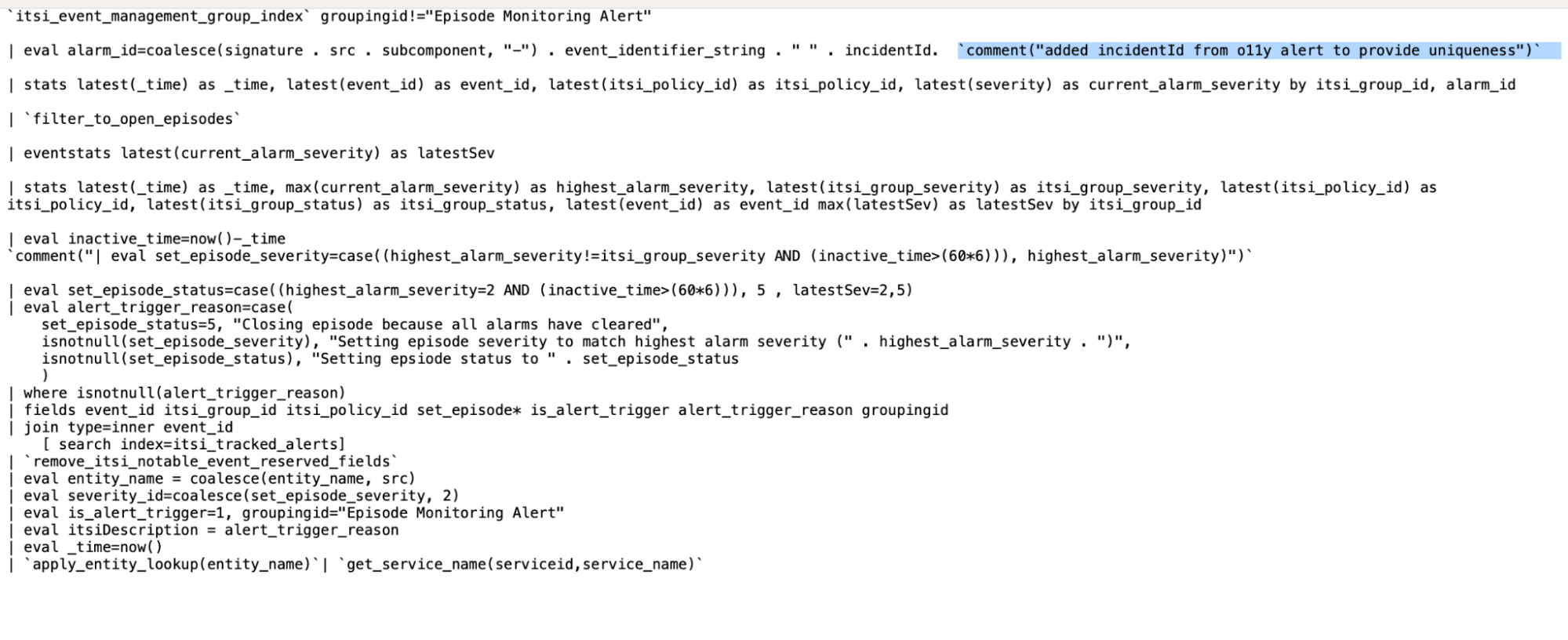

- Review the search called "Episode Monitoring - Set Episode to Highest Alarm Severity o11y". This search is a clone of the standard content pack correlation search called "Episode Monitoring - Set Episode to Highest Alarm Severity". It's good practice to clone a correlation search and add notes that record the version you cloned and any changes you made.

In the correlation search code below, the highlighted comment shows where the Splunk Observability Cloud alert incidentId has been added to make the alarm_id unique. In Splunk Observability Cloud, incidentId identifies the unique lifecycle of a detector-generated alert.

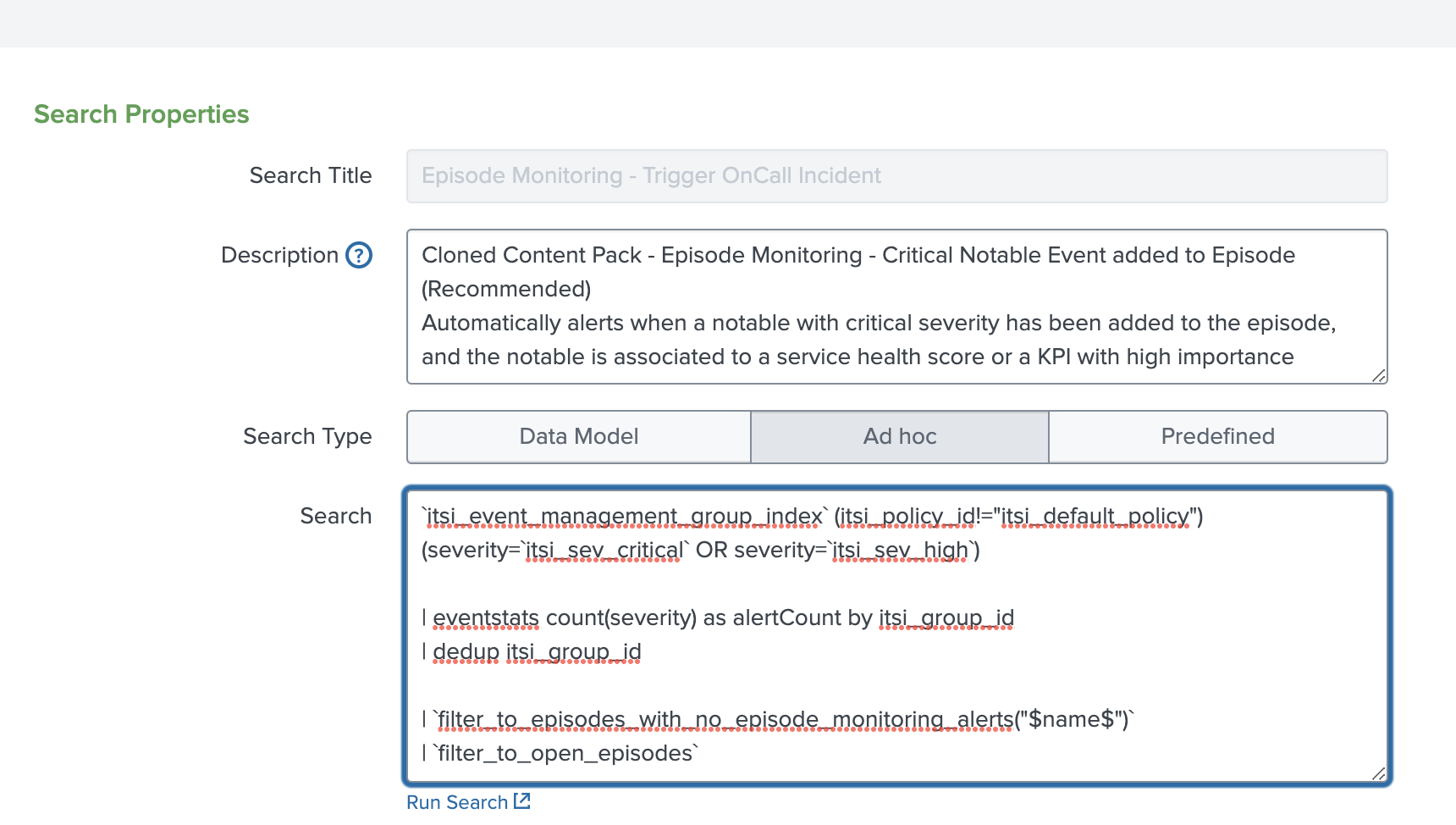
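To see why adding incidentId to the alarm_id matters, the following Python sketch keys each alarm either by detector alone or by detector plus incidentId, keeps only the latest notable event per alarm, and reports the highest remaining severity. It only approximates the idea behind the content pack search. The field names `detector_id` and `incident_id` are hypothetical, and the numeric severities assume ITSI's scale of 1 (Info) through 6 (Critical).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NotableEvent:
    time: int          # event timestamp in epoch seconds
    detector_id: str   # hypothetical field: the Observability Cloud detector
    incident_id: str   # hypothetical field: the alert's incidentId (one alert lifecycle)
    severity: int      # assumed ITSI scale: 1=Info, 2=Normal, ..., 6=Critical


def alarm_id(event: NotableEvent, include_incident: bool = True) -> str:
    """Build the alarm key; appending incidentId keeps separate alert lifecycles apart."""
    if include_incident:
        return f"{event.detector_id}:{event.incident_id}"
    return event.detector_id


def highest_alarm_severity(events: list[NotableEvent], include_incident: bool = True) -> int:
    """Keep the latest event per alarm, then return the highest severity (events must be non-empty)."""
    latest: dict[str, NotableEvent] = {}
    for event in sorted(events, key=lambda e: e.time):
        latest[alarm_id(event, include_incident)] = event  # later events overwrite earlier ones
    return max(e.severity for e in latest.values())


# Two incidents from the same detector; inc-B clears while inc-A is still critical.
events = [
    NotableEvent(100, "det-1", "inc-A", 6),
    NotableEvent(110, "det-1", "inc-B", 6),
    NotableEvent(200, "det-1", "inc-B", 2),
]
print(highest_alarm_severity(events))                          # 6
print(highest_alarm_severity(events, include_incident=False))  # 2
```

Without incidentId, the cleared incident `inc-B` overwrites the still-active incident `inc-A` from the same detector, and the episode would wrongly drop to Normal.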

- Review the search called "Episode Monitoring - Trigger OnCall Incident-o11y". This is a clone of the standard content pack correlation search called "Episode Monitoring - Critical Notable Event Added to Episode". It creates a notable event when one of the notable events in the episode meets a specific severity level, which allows you to open a new Splunk On-Call incident as part of the integration workflow. It also triggers the closure of the Splunk On-Call incident when the Splunk Observability Cloud alert has cleared or returned to a normal state.

The correlation search also determines whether to create a notable event indicating that the original Splunk Observability Cloud alert has cleared. In the screenshot of the correlation search code below, the pink arrow marks the part of the code that sets the status to 5 when this occurs. This status causes the Splunk ITSI notable event aggregation policy to run an action that automatically closes the episode and resolves the incident.
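The per-episode decision this search makes can be sketched in Python as follows. The `cleared` flag, the `incident_open` argument, and the Critical threshold are assumptions for illustration; the real search derives this state from the episode's notable event fields in SPL. The status value 5 is the one described above as causing the notable event aggregation policy to close the episode.

```python
from dataclasses import dataclass
from typing import Optional

CRITICAL = 6       # assumed ITSI numeric severity for Critical
STATUS_CLOSED = 5  # status value that the NEAP action rule treats as "close"


@dataclass(frozen=True)
class NotableEvent:
    time: int
    severity: int
    cleared: bool  # hypothetical flag: the source alert returned to a normal state


@dataclass(frozen=True)
class MonitoringEvent:
    """The new notable event this sketch would add to the episode."""
    purpose: str
    status: Optional[int] = None


def evaluate_episode(events: list[NotableEvent], incident_open: bool,
                     threshold: int = CRITICAL) -> Optional[MonitoringEvent]:
    """Return a monitoring event for one open episode, or None if nothing should change."""
    latest = max(events, key=lambda e: e.time)
    if incident_open and latest.cleared:
        # Alert cleared: status 5 lets the NEAP close the episode and resolve the incident.
        return MonitoringEvent("close", status=STATUS_CLOSED)
    if not incident_open and not latest.cleared and any(e.severity >= threshold for e in events):
        # A notable event met the severity threshold: request a new On-Call incident.
        return MonitoringEvent("open_incident")
    return None


print(evaluate_episode([NotableEvent(100, 6, False)], incident_open=False))
print(evaluate_episode([NotableEvent(100, 6, False), NotableEvent(200, 2, True)], incident_open=True))
```

Checking `incident_open` first keeps the sketch from requesting a second incident for an episode that already has one.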

Next steps

Now that you’ve successfully configured the correlation searches, continue to the next article to configure the Splunk On-Call integration with Splunk ITSI.

Still having trouble? Splunk has many resources available to help get you back on track.

- Splunk Answers: Ask your question to the Splunk Community, which has provided over 50,000 user solutions to date.

- Splunk Customer Support: Contact Splunk to discuss your environment and receive customer support.

- Splunk Observability Training Courses: Comprehensive Splunk training to fully unlock the power of Splunk Observability Cloud.
