Managing the lifecycle of an alert: from detection to remediation

Many organizations experience significant challenges with the alerting process, ranging from overwhelming volumes of alerts leading to fatigue and missed critical incidents, to a lack of contextual information that prevents effective prioritization and response. Inadequate workflows and communication channels can also prevent efficient alert triage and resolution.

To overcome these hurdles, adopt a comprehensive approach to managing the lifecycle of an alert that covers detection, triage, investigation, and remediation. An effective workflow can help you reduce both mean time to detect (MTTD) and mean time to respond (MTTR) for the incidents that cause you operational issues.

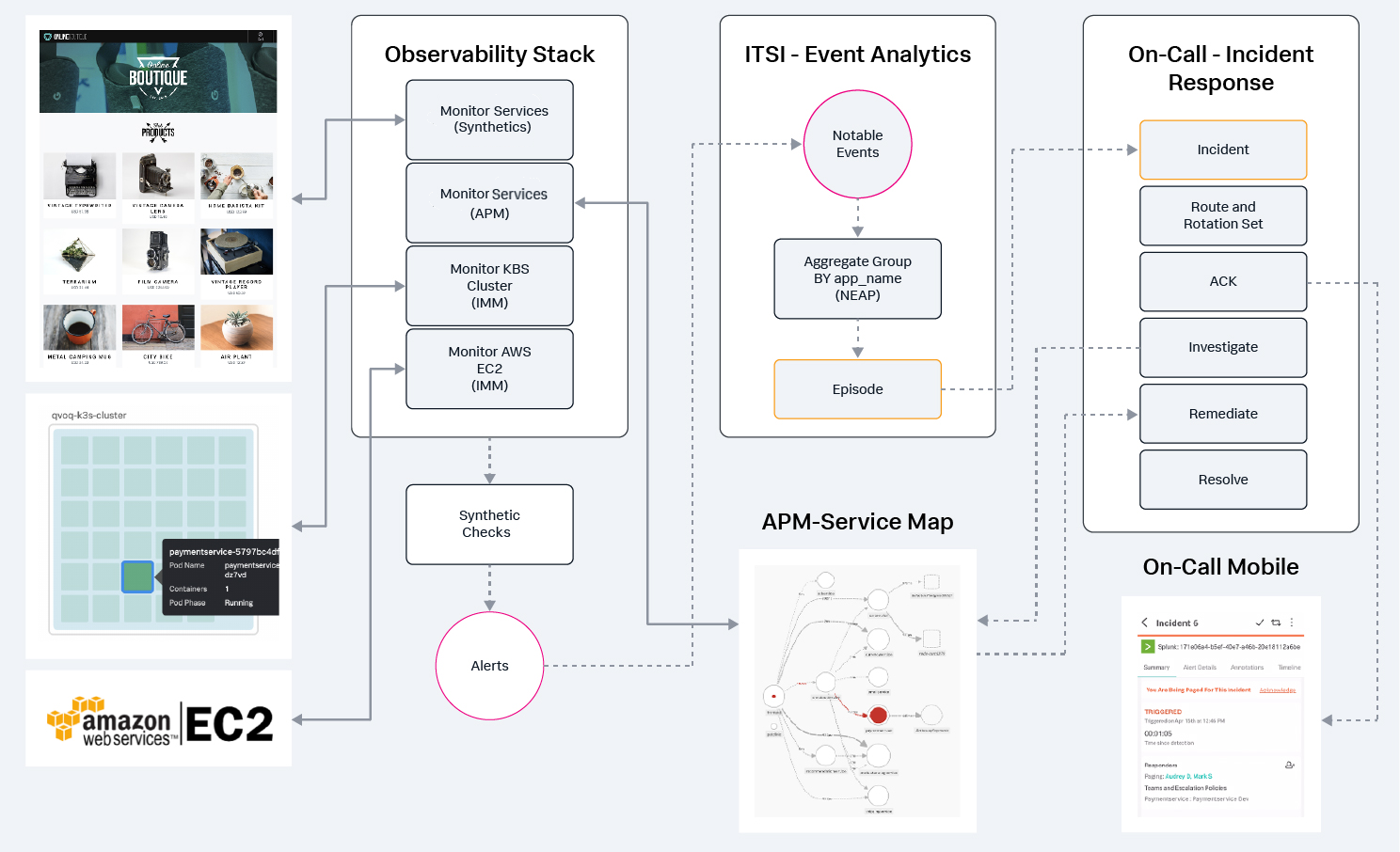

This use case spans several Splunk products that work together to produce a complete alert management workflow. The workflow takes events generated in Splunk Observability Cloud and makes them available in Splunk ITSI. These alerts are normalized, sorted, and grouped into episodes that you can view through Episode Review in Splunk ITSI. Splunk On-Call is also integrated so that when incidents occur, the right teams are notified quickly. This workflow is shown in the preceding image.

For a comprehensive overview of the event analytics workflow as it relates to Splunk ITSI, read Overview of Event Analytics in Splunk Docs.

Data required

Prerequisites

- Download and install the Content Pack for ITSI Monitoring and Alerting. The preconfigured correlation searches and notable event aggregation policies in the content pack help you to produce meaningful and actionable alerts.

- Download the ITSI Backup file and use the ITSI Backup/Restore utility to restore it into your Splunk ITSI instance. The backup file contains a number of modified correlation searches and notable event aggregation policies that are used in the workflow laid out below.

How to use Splunk software for this use case

The articles in this use case are intended to flow and build upon each other, although they can stand on their own for a specific capability you might be interested in.

- Integrate Splunk Observability Cloud Alerts with Cloud Platform or Enterprise. How to configure Splunk Observability Cloud to send alerts from detectors to the Splunk Cloud Platform or Splunk Enterprise event index that Splunk ITSI uses, so that Splunk ITSI can work with them.

- Normalize Observability Cloud alerts into the ITSI Universal Alerting schema. How to normalize the data to ensure it adheres to the ITSI Universal Alerting schema. This ensures that the Universal Correlation Search you'll create later can create notable events in Splunk ITSI from your Splunk Observability Cloud events.

- Configure a Universal Correlation Search to create notable events. How to use the Content Pack for ITSI Monitoring and Alerting to configure the Universal Correlation Search that processes Splunk Observability Cloud alerts and onboards them as notable events in Splunk ITSI.

- Configure the ITSI Notable Event Aggregation Policy (NEAP). How to configure and enable the Notable Event Aggregation Policy (NEAP) to process notable events so they can be grouped into Splunk ITSI episodes.

- Configure ITSI correlation searches for monitoring episodes. How to configure correlation searches in the Content Pack for ITSI Monitoring and Alerting to monitor the notable events contained in a Splunk ITSI episode. This allows Splunk ITSI to create a notable event for the episode that triggers an action rule in the Notable Event Aggregation Policy (NEAP), so actions can be taken such as the creation or resolution of a Splunk On-Call incident, or a change to the severity of the episode.

- Configure the Splunk On-Call integration with IT Service Intelligence. How to configure an action in the ITSI Notable Event Aggregation Policy to automatically create and resolve an incident in Splunk On-Call, and to auto-close the Splunk ITSI episode so that the incident and the episode stay synchronized.

- Configure action rules in the ITSI Notable Event Aggregation Policy for Splunk On-Call Integration. How to configure action rules in your Notable Event Aggregation Policy that create the Splunk On-Call incident and also auto-close the incident when the Splunk ITSI correlation search has determined the episode is healed.

- Investigate and remediate alerts from web applications. How to ensure that the right support team is notified when your web or mobile applications degrade, so they can quickly determine root cause and remediate, bringing the service back to normal.
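To make the flow through these articles more concrete, the following is a minimal conceptual sketch in Python of the three stages they describe: normalizing an incoming alert, grouping alerts into episodes, and choosing an action for an episode. It is not Splunk code and does not call any Splunk API. In Splunk ITSI these stages are handled by correlation searches and Notable Event Aggregation Policies. The field names (`src`, `signature`, `vendor_severity`, `severity_id`), the severity mapping, the raw payload keys, and the action names are all illustrative assumptions, so check the content pack documentation for the actual schema.

```python
from collections import defaultdict
from dataclasses import dataclass

# Assumed mapping from vendor severity labels to numeric severity IDs.
# These values are illustrative only, not taken from the content pack.
SEVERITY_IDS = {"info": 1, "normal": 2, "warning": 3, "minor": 4, "major": 5, "critical": 6}


@dataclass(frozen=True)
class NormalizedAlert:
    """An alert reduced to a small common set of fields (hypothetical schema)."""
    src: str              # the host or resource the alert is about
    signature: str        # what fired, for example a detector name
    vendor_severity: str  # severity label as reported by the source
    severity_id: int      # numeric severity used for comparisons


def normalize(raw: dict) -> NormalizedAlert:
    """Map a raw alert payload (hypothetical keys) onto the common fields."""
    vendor_severity = raw["severity"].lower()
    return NormalizedAlert(
        src=raw["host"],
        signature=raw["detector"],
        vendor_severity=vendor_severity,
        # Unknown labels fall back to the lowest severity.
        severity_id=SEVERITY_IDS.get(vendor_severity, 1),
    )


def group_into_episodes(alerts) -> dict:
    """Group alerts by source, a stand-in for an aggregation policy."""
    episodes = defaultdict(list)
    for alert in alerts:
        episodes[alert.src].append(alert)
    return dict(episodes)


def choose_action(episode: list) -> str:
    """Pick an action from the most recent alert, a stand-in for an action rule."""
    latest = episode[-1]
    if latest.severity_id >= 5:
        return "create_incident"
    if latest.severity_id == 2:
        return "resolve_incident"
    return "no_action"


raw_alerts = [
    {"host": "web-01", "detector": "High latency", "severity": "Critical"},
    {"host": "web-01", "detector": "High latency", "severity": "Normal"},
    {"host": "db-01", "detector": "Disk full", "severity": "Major"},
]
episodes = group_into_episodes(normalize(raw) for raw in raw_alerts)
for src, episode in episodes.items():
    print(src, choose_action(episode))
```

In this sketch, grouping by `src` is only one possible choice. A real aggregation policy can split episodes on other fields and apply time-based breaking criteria, which the articles above cover.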

Next steps

This event analytics and incident management framework helps drive operational excellence by reducing mean time to detect (MTTD) and mean time to respond (MTTR) for the incidents that cause you operational issues.

Still having trouble? Splunk has many resources available to help get you back on track.

- Splunk Answers: Ask your question to the Splunk Community, which has provided over 50,000 user solutions to date.

- Splunk Customer Support: Contact Splunk to discuss your environment and receive customer support.

- Splunk Observability Training Courses: Comprehensive Splunk training to fully unlock the power of Splunk Observability Cloud.