Building an Edge Processor container (Cloud)

This series on scaling Edge Processors focuses on Kubernetes in general and Amazon EKS in particular, so we’re going to build and use Docker images throughout. The Splunk platform does not manage or release any Docker images for Edge Processor, so we will build our own in this step.

Build a generic Edge Processor node container

Because we can’t embed the binaries and configuration settings into the container, we need to run the predefined bootstrap script and provide it with configuration variables. To make sure each container starts with the most recent binaries, and to keep the image generic so it can be used with any specific Edge Processor group configuration, we’ll build and run our container using a long-running entrypoint script pattern.
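To make the pattern more concrete, here is a minimal sketch of what a long-running entrypoint could look like. It is not the entrypoint script used in this series. The function names (download_bootstrap, start_node, offboard_node, stop_node, run_entrypoint) and their placeholder bodies are assumptions made purely for illustration.

```shell
#!/bin/sh
# Hypothetical sketch of a long-running entrypoint. This is NOT the script
# referenced in this article; every function body below is a placeholder.

NODE_PID=""

download_bootstrap() {
    # Placeholder: a real script would download the bootstrap file here (for example with curl).
    echo "downloading bootstrap (placeholder)"
}

start_node() {
    # Placeholder: start the node process in the background and remember its PID.
    sleep 3600 &
    NODE_PID=$!
}

offboard_node() {
    # Placeholder: a real script would run its offboard command here.
    echo "offboarding node (placeholder)"
}

stop_node() {
    # Signal handler: ask the node process to stop if it was started.
    if [ -n "$NODE_PID" ]; then
        kill "$NODE_PID" 2>/dev/null
    fi
}

run_entrypoint() {
    trap stop_node TERM INT
    download_bootstrap
    start_node
    # Block here so the container stays alive until the node exits or a signal arrives.
    wait "$NODE_PID"
    # Runs after the node stops, so the instance is not left orphaned.
    offboard_node
}
```

In a script built this way, run_entrypoint would be called on the last line. The point of the sketch is the ordering: the script stays in the foreground while the node runs, and the offboard step only happens if the script is allowed to finish.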

If you’ve never worked with Docker or built containers before, there are good resources online to get up to speed.

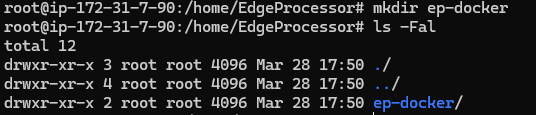

Here are the steps to build our container:

First we need a clean directory to work in on a Linux server. This directory will hold the Dockerfile that defines our container, as well as possibly some supplemental files that will be covered later. Specific naming of the working directory isn’t important here.

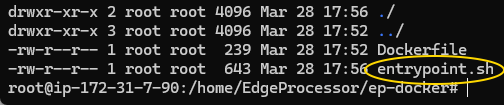

Create a file called Dockerfile and include the content below. It’s not necessary to use Ubuntu. Any supported OS can be used. Additionally, note that curl is installed because it’s used in the entrypoint script to download the Edge Processor bootstrap file. You can replace curl, and the reference to curl in the script, with whatever tool you prefer for downloading the file.

FROM ubuntu:20.04

Download the entrypoint script here and place it in the working directory.

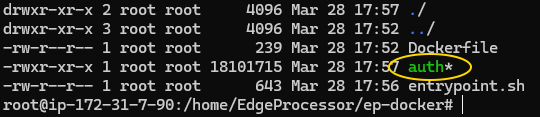

Place the auth binary from the previous article into the working directory.
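With the three files described above in one directory, a Dockerfile along the following lines would tie them together. This is a sketch under stated assumptions, not the Dockerfile from this series: the file names entrypoint.sh and auth, the /opt/edgeprocessor path, and the output name Dockerfile.example are all hypothetical. Only the base image and the use of curl come from the steps above.

```shell
# Hypothetical sketch: write an example Dockerfile into the current directory.
# entrypoint.sh and auth are assumed file names; substitute the real ones.
cat > Dockerfile.example <<'EOF'
FROM ubuntu:20.04

# curl is used by the entrypoint script to download the bootstrap file.
RUN apt-get update \
    && apt-get install -y --no-install-recommends curl ca-certificates \
    && rm -rf /var/lib/apt/lists/*

WORKDIR /opt/edgeprocessor

# Copy the entrypoint script and the auth binary from the working directory.
COPY entrypoint.sh auth ./
RUN chmod +x entrypoint.sh auth

ENTRYPOINT ["/opt/edgeprocessor/entrypoint.sh"]
EOF
```

The heredoc delimiter is quoted so the shell writes the content verbatim. The file is written as Dockerfile.example so that it does not overwrite a Dockerfile you have already created.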

|

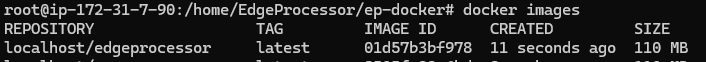

With those files in place, we can build our Docker image, which creates the image locally. We can use that for testing. |

docker build -t edgeprocessor .

|

Run and test the container

Now that we have a container image, we can run it locally to test that it is working. Looking at the entrypoint script, we can see that it works using the following environment variables, which should look familiar from the review of the authentication and install script articles.

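$GROUP_ID: The Edge Processor group ID for the node

$SCS_TENANT: Your SCS tenant name

$SCS_ENV: The SCS environment, for example production

$SCS_SP: Your service principal

$SCS_PK: Your service principal private key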
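Because a missing variable usually only shows up once the container is already running, it can help to check them first. The following is a small sketch of such a check. The helper name check_env is hypothetical, and the variable names are the ones passed with -e in the docker run command in this section.

```shell
# Hypothetical helper: report any required variable that is unset or empty.
check_env() {
    missing=0
    for name in GROUP_ID SCS_TENANT SCS_ENV SCS_SP SCS_PK; do
        # Look up the value of the variable whose name is stored in $name.
        eval "value=\${$name:-}"
        if [ -z "$value" ]; then
            echo "missing: $name" >&2
            missing=1
        fi
    done
    # Returns 0 when everything is set, 1 otherwise.
    return "$missing"
}
```

Calling check_env in the shell where you set the variables prints one line per missing name and returns a non-zero status if anything is absent.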

You can now run the container locally to test the behavior.

docker run \
  -e GROUP_ID=<your edge processor group id> \
  -e SCS_TENANT=<your scs tenant name> \
  -e SCS_ENV=production \
  -e SCS_SP=<your principal service principal> \
  -e SCS_PK=<your principal private key> \
  edgeprocessor

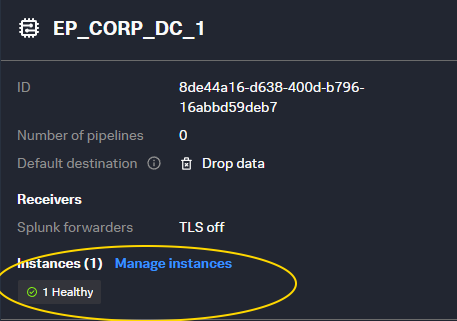

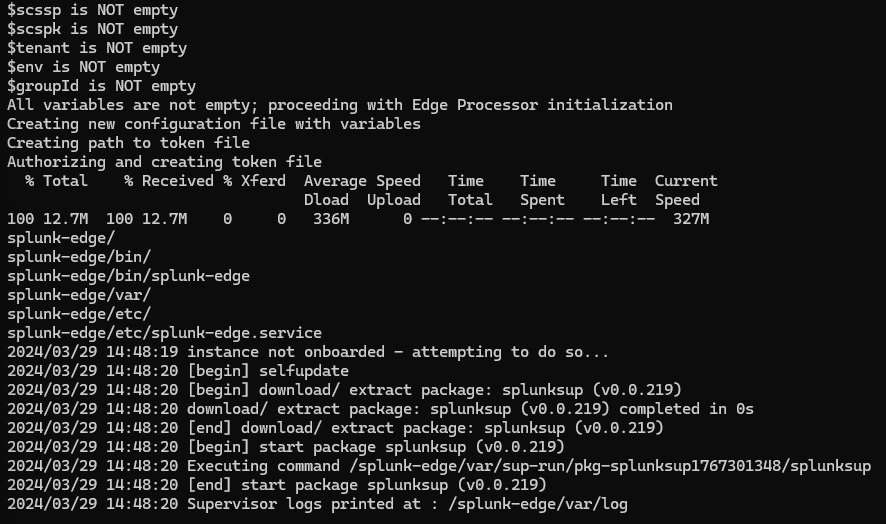

Your terminal will show the container’s log output, including status information, and your Edge Processor console will show your new instance.
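Because the private key is a JSON payload, quoting matters when passing it to docker run. One way to keep the quoting in a single place is a small wrapper that reads the values from shell variables. This is a sketch only: the function name run_edgeprocessor is hypothetical, and the JSON in the commented example is a made-up stand-in for a real key.

```shell
# Hypothetical wrapper around the docker run command used in this section.
run_edgeprocessor() {
    # Double quotes around each expansion keep values with spaces or
    # JSON punctuation together as a single argument.
    docker run \
        -e GROUP_ID="$GROUP_ID" \
        -e SCS_TENANT="$SCS_TENANT" \
        -e SCS_ENV="$SCS_ENV" \
        -e SCS_SP="$SCS_SP" \
        -e SCS_PK="$SCS_PK" \
        edgeprocessor
}

# Example usage (placeholder values; the JSON is not a real key format):
# GROUP_ID='<your edge processor group id>'
# SCS_PK='{"example_field": "example value"}'
# run_edgeprocessor
```

Assigning the key inside single quotes stops the shell from interpreting the double quotes and braces in the JSON, and expanding it inside double quotes passes it to the container unchanged.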


Clean up after testing

After we see that the container runs and the node properly registers with the Edge Processor group, we can terminate our test Docker container. It’s important to terminate the container properly so that the offboard command from the entrypoint script runs; otherwise, you will end up with an orphaned instance.

Get the container ID of your running container.

docker ps
CONTAINER ID  IMAGE                           COMMAND  CREATED        STATUS            PORTS  NAMES
4731ae03b665  localhost/edgeprocessor:latest           8 seconds ago  Up 9 seconds ago         adoring_franklin

Issue a terminate command to that container.

docker exec 4731ae03b665 sh -c 'kill $(pidof splunk-edge)'
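If you test repeatedly, the two steps above can be combined so you don’t have to copy the container ID by hand. The following sketch assumes the image is named edgeprocessor as in the build step. The function name stop_edgeprocessor is hypothetical, and the ancestor filter may need the full image reference if your runtime reports the image under a different name.

```shell
# Hypothetical helper: terminate the node in every running container
# that was started from the edgeprocessor image.
stop_edgeprocessor() {
    # docker ps -q prints only container IDs; the ancestor filter limits
    # the list to containers created from the given image.
    for id in $(docker ps -q --filter ancestor=edgeprocessor); do
        # Same terminate command as above, applied to each matching container.
        docker exec "$id" sh -c 'kill $(pidof splunk-edge)'
    done
}
```

The single quotes around the kill command matter here: they make sure pidof runs inside the container rather than on the host.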

Closing notes

- Troubleshooting containers in general can be a laborious task, and troubleshooting this process in particular is outside the scope of this article. All of the tools provided in this article have debug flags and can be run manually from within the container.

- The Splunk Cloud Platform service principal private key is a JSON payload. You almost always have to wrap the JSON in single quotes (') when working with it in the terminal or in environment variables. This is the most common mistake in this process.

- The container image we created is only stored locally on the server we’re using to build the container. For use in an enterprise environment such as EKS, OpenShift, Rancher, or others, you will need to push this image into a repository that can be referenced within your deployment manifests.
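As a rough illustration of the last note, pushing the local image usually comes down to tagging it with the registry path and pushing that tag. The helper below is a sketch: the function name push_image and the registry path registry.example.com/team are hypothetical, and it assumes you have already authenticated to your registry with docker login.

```shell
# Hypothetical helper: tag the local edgeprocessor image for a registry and push it.
# Usage: push_image <registry path> [tag]
push_image() {
    registry="$1"
    tag="${2:-latest}"
    # Only push if tagging succeeded.
    docker tag edgeprocessor "$registry/edgeprocessor:$tag" \
        && docker push "$registry/edgeprocessor:$tag"
}

# Example with a placeholder registry path:
# push_image registry.example.com/team 1.0
```

The resulting image reference, such as registry.example.com/team/edgeprocessor:1.0 in the placeholder example, is what a deployment manifest would point to.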

Next steps

Now that we have a good working container and can pass in the proper configuration variables, we can start building our Kubernetes configurations to support a scalable deployment.